Intro

Hey there, you know what's been driving me crazy lately? That damn Python deprecation warning on Windows 10 after upgrading to OCI CLI 3.x. It's been going on for a month or two now, and at first I just gave up on it. But when the prompts of my OCI CLI shell scripts started to get ugly, I knew I had to take matters into my own hands. So I did some digging and came up with a fix that's gonna silence all that Python deprecation warning nonsense for good. This probably affects all Windows versions, not just Windows 10.

I. How bad is it?

Let's just say it's not pretty. Every time you run an OCI command on Windows, you're slapped in the face with a big, ugly warning, no matter which arguments you use. It's enough to make even the most chill of us cringe.

Impacted environment:

OS: Windows 32/64-bit

OCI CLI version: probably anything from 3.x onward

To reproduce the issue on Windows:

Run the following installation commands in PowerShell as administrator (see the installation guide):

PS C:\> Set-ExecutionPolicy RemoteSigned

PS C:\> powershell -NoProfile -ExecutionPolicy Bypass -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/oracle/oci-cli/master/scripts/install/install.ps1'))"

PS C:\> oci -v

c:\program files\lib\oracle\oci\lib\site-packages\oci\_vendor/httpsig_cffi/sign.py:10: CryptographyDeprecationWarning: Python 3.6 is no longer supported by the Python core team. Therefore, support for it is deprecated in cryptography and will be removed in a future release.
  from cryptography.hazmat.backends import default_backend # noqa: F401

II. Root cause

The Python release the CLI runs on is clearly no longer supported, as the CryptographyDeprecationWarning message states.

While browsing through OCI CLI issues on GitHub, I also found a user's report of the same warning on issue #639.

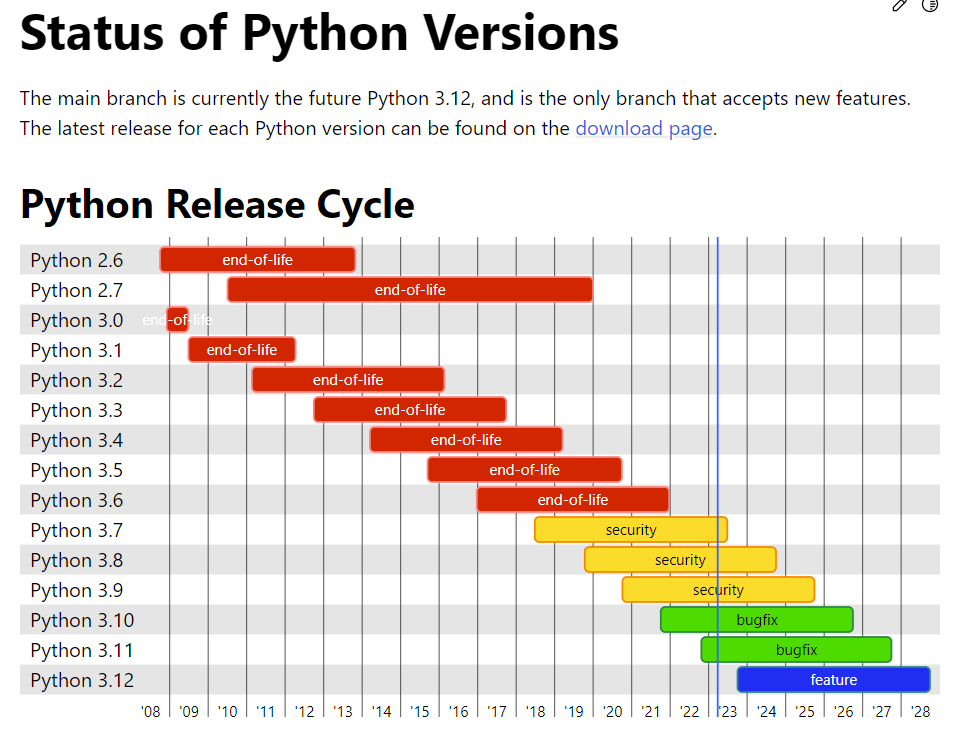

And as expected, it turns out Python 3.6 was already toast, and anything below 3.10 was heading for end-of-life support soon.

You can check the support time frames on python.org:

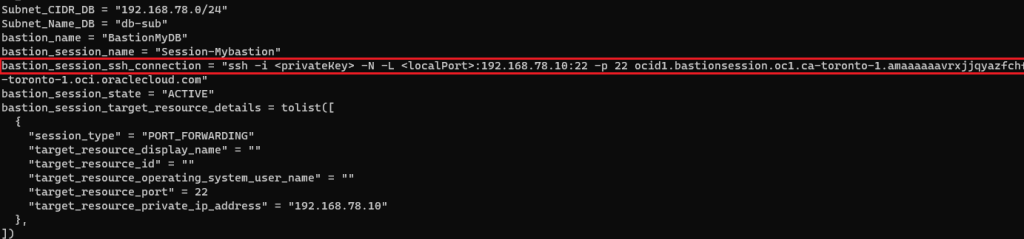

III. Why did OCI CLI install a deprecated Python version?

Despite installing the latest version of OCI CLI, the packaged Python version remained outdated (v3.6, according to the warning).

But why and how?

This led me to investigate further, and I noticed something odd while the PowerShell install script was running.

The Python version that the oci-cli installer actually installs is 3.8.5, which is still old enough to trigger the deprecation warning:

PS C:\> powershell -NoProfile -ExecutionPolicy Bypass -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/oracle/oci-cli/master/scripts/install/install.ps1'))"

VERBOSE: No valid Python installation found. Python is required to run the CLI. Install Python now? (Entering "n" will exit the installation script) [Y] Yes [N] No [?] Help (default is "Y"): Y
VERBOSE: Downloading Python...
VERBOSE: Download Complete!
VERBOSE: Successfully installed Python!
...
-- Verifying Python version.
-- Python version 3.8.5 okay.

From the Python support chart, it's clear that 3.10 or later is the way to go to avoid deprecation issues.

With that in mind, we need to alter the official install.ps1 script so it installs the Python version we ask for.

The section below explains how.

IV. Install script forensics & solution

Open install.ps1 in a PowerShell editor and inspect it:

Well, well, well, what do we have here? It seems the Python release is hardcoded in the install.ps1 script, no matter which oci-cli version you're trying to install.

...

$PythonInstallScriptUrl = "https://raw.githubusercontent.com/oracle/oci-cli/v3.2.1/scripts/install/install.py"
$FallbackPythonInstallScriptUrl = "https://raw.githubusercontent.com/oracle/oci-cli/v2.22.0/scripts/install/install.py"
$PythonVersionToInstall = "3.8.5" # <<<- version of Python to install if none exists
$MinValidPython3Version = "3.6.0" # minimum required version of Python 3 on system
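The installer's "Python version 3.8.5 okay" verdict makes sense once you compare it with $MinValidPython3Version = "3.6.0". Here's a minimal sketch of that kind of check. Test-PythonVersion is a hypothetical helper, not the actual code from install.ps1, and it assumes both values are plain dotted version strings. Casting to [version] compares each component numerically.

```powershell
# Hypothetical helper, not taken from install.ps1:
# returns $true when the installed Python meets the minimum version.
function Test-PythonVersion {
    param(
        [Parameter(Mandatory = $true)] [string] $Installed,
        [Parameter(Mandatory = $true)] [string] $Minimum
    )
    # [version] compares major, minor and build numbers numerically
    return ([version]$Installed) -ge ([version]$Minimum)
}

Test-PythonVersion -Installed "3.8.5" -Minimum "3.6.0"    # True: why 3.8.5 is "okay"
Test-PythonVersion -Installed "3.8.5" -Minimum "3.10.10"  # False: would be rejected
```

Comparing the raw strings instead would be a trap: "3.8.5" -ge "3.10.10" is True in PowerShell, because strings are compared character by character and '8' sorts after '1'.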

Solution

Apply the fix: I simply set both $PythonVersionToInstall and $MinValidPython3Version to "3.10.10".
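If you'd rather not edit the file by hand, a small helper can apply the same change to the script text. This is a sketch: Set-PythonVersionPin is a hypothetical name, and it assumes both assignments keep the `$Name = "x.y.z"` form shown above. If that layout changes upstream, the regex simply won't match and the text comes back unchanged.

```powershell
# Hypothetical helper: pins both Python version variables in the text of install.ps1.
# Assumes the assignments look like: $PythonVersionToInstall = "3.8.5"
function Set-PythonVersionPin {
    param(
        [Parameter(Mandatory = $true)] [string] $ScriptText,
        [string] $Version = "3.10.10"
    )
    # ${1} re-inserts the captured '$Name = ' prefix; single quotes keep PowerShell from expanding it
    $replacement = '${1}"' + $Version + '"'
    foreach ($name in 'PythonVersionToInstall', 'MinValidPython3Version') {
        $pattern = '(\$' + $name + '\s*=\s*)"[^"]*"'
        $ScriptText = $ScriptText -replace $pattern, $replacement
    }
    return $ScriptText
}
```

For example, download the official script text with (New-Object System.Net.WebClient).DownloadString(...), pass it to Set-PythonVersionPin, save the result with Set-Content -Path .\install.ps1, and run that local copy.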

Run the install script again.

As you can see below, a more recent Python release is now picked and installed:

PS C:\> powershell -NoProfile -ExecutionPolicy Bypass -File .\install.ps1    <<< updated script
...

-- Verifying Python version.
-- Python version 3.10.10 okay.   <<<
...
===> In what directory would you like to place the install?
===> In what directory would you like to place the 'oci.exe' executable?
===> In what directory would you like to place the OCI scripts?
...
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 97.5/97.5 kB ? eta 0:00:00
Collecting cryptography<40.0.0,>=3.2.1
  Downloading cryptography-39.0.2-cp36-abi3-win32.whl (2.1 MB)
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.5/2.5 MB 31.3 MB/s eta 0:00:00

...

VERBOSE: Successfully installed OCI CLI!

With the change in place, let's check whether it got rid of the annoying warning.

And voilà, a nice and clean prompt on my Windows 11 machine:

PS C:\> oci -v

3.24.4
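To make that check repeatable (say, after each upgrade), you could capture the CLI output and look for the warning text. A minimal sketch: Test-CleanOciOutput is a hypothetical helper, and it assumes the warning always contains the string "DeprecationWarning", as the CryptographyDeprecationWarning above does. Python prints warnings to stderr, hence the 2>&1 redirection in the usage comment.

```powershell
# Hypothetical helper: $true when no captured line mentions a DeprecationWarning.
function Test-CleanOciOutput {
    param([string[]] $Lines)
    $hits = @($Lines | Where-Object { $_ -match 'DeprecationWarning' })
    return $hits.Count -eq 0
}

# Example: merge stderr into stdout, turn each record into plain text, then check it.
# $output = oci -v 2>&1 | ForEach-Object { "$_" }
# Test-CleanOciOutput -Lines $output
```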

V. PowerShell users beware

Believe it or not, your PowerShell environment can easily mislead your oci-cli installation too.

How? By mistakenly downloading the 32-bit version of Python instead of the 64-bit one.

It all happens when you run the install script from the x86 (32-bit) PowerShell environment.

As a result, a warning pops up each time you run an oci command.

The script checks the OS architecture (64- or 32-bit) through the PowerShell environment it runs in.

If you run the script from an x86 PowerShell terminal, it'll assume your OS is 32-bit and install a 32-bit Python.

//// From an x86 prompt

PS C:\WINDOWS\system32> Invoke-Expression [IntPtr]::Size

4

//// From a 64-bit prompt

PS C:\WINDOWS\system32> Invoke-Expression [IntPtr]::Size

8

//// The install.ps1 script detects the architecture from the pointer size of the current PowerShell process (line 200):

# IntPtrSize == 8 on 64 bit machines
$IntPtrSize = Invoke-Expression [IntPtr]::Size

if ($IntPtrSize -eq 8) { $PythonInstallerUrl = "https://www.python.org/ftp/python/$Version/python-$Version-amd64.exe" }

From an x86 prompt, this returns 4 instead of 8, so the script picks the wrong (32-bit) Python installer.
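One way around this trap is to ask about the operating system rather than the current process. The sketch below is a hypothetical replacement for that check, not Oracle's code: it uses [Environment]::Is64BitOperatingSystem, which reports True on 64-bit Windows even from an x86 PowerShell prompt. It also assumes python.org's naming scheme, where the 32-bit installer is python-<version>.exe and the 64-bit one is python-<version>-amd64.exe.

```powershell
# Hypothetical helper, not part of install.ps1: picks the Python installer by OS bitness.
function Get-PythonInstallerUrl {
    param(
        [Parameter(Mandatory = $true)] [string] $Version,
        # Defaults to the OS architecture, which stays the same inside a 32-bit PowerShell session
        [bool] $Is64BitOS = [Environment]::Is64BitOperatingSystem
    )
    $base = "https://www.python.org/ftp/python/$Version"
    if ($Is64BitOS) {
        return "$base/python-$Version-amd64.exe"
    }
    return "$base/python-$Version.exe"
}

Get-PythonInstallerUrl -Version "3.10.10"
```

The $Is64BitOS parameter exists mainly so the choice can be overridden or tested without switching machines.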

Pro-tip

Always run this command first to make sure you're using the 64-bit PowerShell environment.

//// From an x86 prompt

PS C:\WINDOWS\system32> [Environment]::Is64BitProcess

False

//// From a 64-bit prompt

PS C:\WINDOWS\system32> [Environment]::Is64BitProcess

True
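You can also turn that pro-tip into a guard at the top of your own wrapper script. A sketch, assuming Windows PowerShell 5.1 paths: Test-Wow64PowerShell is a hypothetical helper, and "sysnative" is the alias a 32-bit process can use to reach the real 64-bit System32 folder on 64-bit Windows.

```powershell
# Hypothetical helper: $true when a 32-bit PowerShell is running on a 64-bit OS (WOW64).
function Test-Wow64PowerShell {
    param(
        [bool] $Is64BitOS = [Environment]::Is64BitOperatingSystem,
        [bool] $Is64BitProcess = [Environment]::Is64BitProcess
    )
    return $Is64BitOS -and -not $Is64BitProcess
}

if (Test-Wow64PowerShell) {
    # From a 32-bit process, "sysnative" points at the real 64-bit System32 folder
    $ps64 = Join-Path $env:windir 'sysnative\WindowsPowerShell\v1.0\powershell.exe'
    Write-Warning "You are in 32-bit PowerShell. Rerun the installer from: $ps64"
}
```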

VI. How to use my script until Oracle fixes the problem

Very easy. I've uploaded my modified script to my GitHub repo with a little tweak to download Python 3.10 instead.

Run the same commands, but with my script URL instead:

PS C:\> Set-ExecutionPolicy RemoteSigned

PS C:\> powershell -NoProfile -ExecutionPolicy Bypass -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/brokedba/oci-cli-examples/master/installation_win64/install.ps1'))"

PS C:\> oci -v
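Since the one-liner pipes whatever the URL returns straight into iex, you may want to peek at the pinned Python version before running it. A sketch: Get-PinnedPythonVersion is a hypothetical helper that assumes the $PythonVersionToInstall = "..." assignment shown earlier is still present in the script.

```powershell
# Hypothetical helper: returns the Python version pinned in install.ps1 text, or $null if not found.
function Get-PinnedPythonVersion {
    param([Parameter(Mandatory = $true)] [string] $ScriptText)
    if ($ScriptText -match '\$PythonVersionToInstall\s*=\s*"([^"]+)"') {
        return $Matches[1]
    }
    return $null
}

# Example (downloads the script text without running it):
# $url  = 'https://raw.githubusercontent.com/brokedba/oci-cli-examples/master/installation_win64/install.ps1'
# $text = (New-Object System.Net.WebClient).DownloadString($url)
# Get-PinnedPythonVersion -ScriptText $text
```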

CONCLUSION

This solution to the deprecated Python warnings after installing or upgrading OCI CLI on Windows 10 will hopefully help those who still experience the problem.

My workaround can be used until the OCI CLI maintainers tackle the issue in a future pull request.

The Oracle Support note "OCI SDK - Python 3.6 is No Longer Supported by the Python Core Team" (Doc ID 2902080.1) acknowledges the issue but doesn't provide a workaround.

If this happens on Linux, you might want to use a Python virtual environment to avoid messing with your server's setup (see the blog from S.Petrus).
