Intro

One of the advantages of Oracle grid Infrastructure is to restart all managed resources (databases, asm, listeners,…etc) automatically whenever you (re)start the cluster service (crs) or reboot the whole server.

I recently had to patch an oda where the client still had few listeners (with non default ports) managed locally and not through grid. This means that anytime there is maintenance or a reboot those listeners would require a manual restart. I ,hence, needed to convert them into Clusterware resources so we wouldn’t worry about restarting them manually after the patch.

Downtime?

Existing connections won’t be interrupted but new connections will wait for the database services to register back to the new listeners recreated in the Clusterware as the old ones will be stopped during the process. We obviously can’t add a listener when another listener is running with the same port.

Use case

This was done on a single node ODA where single instance databases were deployed but still managed by the grid infrastructure software. There were 3 listeners(1522,1523,1524) defined with a non default port and a couple of instance configured to be registered to those listeners.

Service registration

The mentioned instances services were registered to the non default listeners using “local_listener” parameter. Normally, you don't have to set listener parameters, neither local_listener nor remote_listener because the background process LREG will register services with the default local and remote 1521 listener automatically. With a different port,however, local_listener must be set to point to the listeners’ addresses (i.e port 1522).

- In my case each of the instances had the local_listener set to a similar entry to the below, depending on the port.

SQL> show parameter local_listener

NAME TYPE VALUE

------------------------------------ ----------- --------------------------------------

local_listener string (ADDRESS=(PROTOCOL=TCP)(HOST=odaprd1)

(PORT=1522))

Note: You can also resolve the LOCAL_LISTENER address to a listener alias defined in the tnsnames file on the database host

THE STEPS :

Below steps were ran as grid user but in case of a single ownership (oracle user) for grid and database software you can use oracle.

1. Backup the existing listener file

[grid@odaprd] cp $GRID_HOME/network/admin/listener.ora ~/listener.ora.bkp

2. Stop the listeners in question

[grid@odaprd1]$ export ORACLE_HOME=/u01/app/18.0.0.0/grid

[grid@odaprd1]$ lsnrctl stop LISTENER_1522

[grid@odaprd1]$ lsnrctl stop LISTENER_1523

[grid@odaprd1]$ lsnrctl stop LISTENER_1524

3. Edit the listener file

[grid@odaprd] vi $ORACLE_HOME/network/admin/listener.ora

Delete the entries related to the listeners we stopped to avoid conflicts with the ones created via srvctl add listener

LISTENER_1522=(DESCRIPTION_LIST=...

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_1522=ON

...

LISTENER_1523=(DESCRIPTION_LIST=...

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_1523=ON

...

LISTENER_1524=(DESCRIPTION_LIST=...

ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_1524=ON

4. Add the listeners resources in the crs

Now we can add the listeners at clusterware level. Note that -p argument is likely deprecated and replaced with –endpoints

[grid@odaprd] srvctl add listener -l LISTENER_1522 -o /u01/app/18.0.0.0/grid -p 1522

[grid@odaprd] srvctl add listener -l LISTENER_1523 -o /u01/app/18.0.0.0/grid -p 1523

[grid@odaprd] srvctl add listener -l LISTENER_1524 -o /u01/app/18.0.0.0/grid -p 1524

5. Confirm that the changes are now effective

There are few ways to verify the status of the newly created listeners. lsnrctl status is one of them and will show the database services that were initially registered to the previous local listeners. The status can also be checked using srvctl

[grid@odaprd] srvctl status listener -l LISTENER_1522

Listener LISTENER_1522 is enabled

Listener LISTENER_1524 is running on node(s): odaprd

[grid@odaprd] srvctl status listener -l LISTENER_1523

Listener LISTENER_1523 is enabled

Listener LISTENER_1523 is running on node(s): odaprd

[grid@odaprd] srvctl status listener -l LISTENER_1524

Listener LISTENER_1524 is enabled

Listener LISTENER_1524 is running on node(s): odaprd

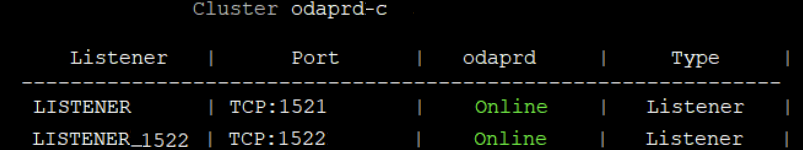

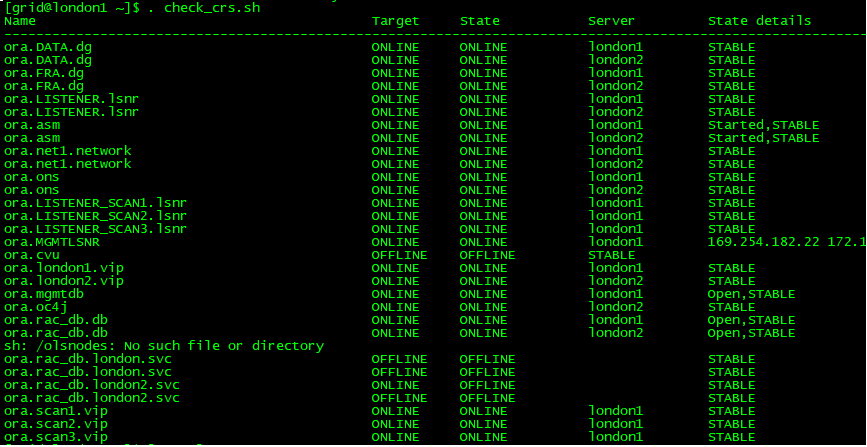

Or you can run Fred Denis’ famous rac-cluster.sh script that shows you the graphical display of your grid resources

[grid@odaprd] ./rac-status.sh -e

Conclusion

It is very easy to move existing listeners defined with non default ports to the clusterware as long as the local_listener parameter is defined. Although I haven’t tried to run srvctl add listener without pre-emptively removing the old entries defined the listener.ora but I am pretty sure that would lead to a conflict and would trigger an error.