Intro

After AWS, Oracle Cloud, and Azure, GCP is the fourth cloud platform in our Terraform tutorial series. We will describe what it takes to authenticate and provision a Compute Engine instance using the Google Terraform provider. The instance will also serve an nginx website on its public IP. If you want to know about the differences GCP brings in terms of networking, they are wrapped up on my blog.

Note: GCP Terraform provider authentication was hard to get right and counterintuitive compared to other cloud platforms. I wasted a lot of time just trying to figure out whether I could avoid hardcoding the project ID.

Here’s a direct link to the GitHub repo for this lab: terraform-examples/terraform-provider-gcp

Content :

I. Terraform setup

II. Clone the repository

III. Provider setup

IV. Partial deployment

V. Full deployment

Tips & Conclusion

Overview and Concepts

Topology

The following illustration shows the layers involved between your workstation and GCP cloud while running the terraform actions along with the instance attributes we will be provisioning.

- Files are merged in alphabetical order, but resource definition order doesn't matter (subfolders are not read).

- Common configurations have three types of .tf files and a state file:

1- main.tf: Terraform declaration code (configuration). The file name can be anything you choose

2- variables.tf: resource variables needed for the deploy

3- outputs.tf: displays the resource details at the end of the deploy

4- terraform.tfstate: keeps track of the state of the stack (resources) after each terraform apply run
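As a rough illustration of how the three file types relate, here is a minimal sketch. The variable and output names are borrowed from later in this lab; the resource body is an assumption and not the repo's actual code.

```hcl
# variables.tf: inputs the configuration expects
variable "prefix" {
  description = "The prefix used for all resources in this example"
  type        = string
}

# main.tf (any *.tf name works): the resource declarations
resource "google_compute_network" "terra_vpc" {
  # GCP resource names must be lowercase, hence lower() (assumed naming scheme)
  name                    = "${lower(var.prefix)}-vpc"
  auto_create_subnetworks = false
}

# outputs.tf: values printed at the end of terraform apply
output "vpc_name" {
  value = google_compute_network.terra_vpc.name
}
```

The state file is not written by hand; Terraform creates and updates terraform.tfstate on each apply.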

Component "Provider_Resource_type" "MyResource_Name" {

Attribute1 = value ..

Attribute2 = value ..

}

Example of importing an existing VPC >>

1- Create a shell resource declaration for the VPC in a file called vpc.tf

2- Get the id of the VPC resource from your GCP portal

3- Run terraform import, then run terraform show to extract the full VPC declaration from GCP into the same file (vpc.tf)

4- Now you can remove the id attribute along with all non-required attributes to create a VPC resource (do that for each resource)

1- # vi vpc.tf

provider "google" {

}

resource "google_compute_network" "terra_vpc" {

}

2- # terraform import google_compute_network.terra_vpc {{project}}/{{name}}

3- # terraform show -no-color > vpc.tf

Note: If you want to import all the existing resources in your account in bulk mode, terraformer can help import both code and state from your GCP account automatically.
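After step 4, the trimmed vpc.tf might look something like the sketch below. The attribute values are assumptions for illustration; the point is that computed, read-only attributes dumped by terraform show have to go before the file can be applied.

```hcl
resource "google_compute_network" "terra_vpc" {
  name                    = "terra-vpc" # assumed name of the imported VPC
  auto_create_subnetworks = false
  routing_mode            = "REGIONAL"

  # Removed after the import because they are computed by GCP:
  #   id, self_link, gateway_ipv4
}
```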

Terraform lab content: I purposely split this lab in two for more clarity

- VPC Deployment: To grasp the basics of a single resource deployment.

- Instance Deployment: Includes the instance provisioning, configured as a web server (includes the above VPC).

I. Terraform setup

I tried the lab using a WSL (Ubuntu) terminal from Windows, but the same applies to Mac.

Windows: Download and run the installer from their website (32-bit ,64-bit)

Linux: Download, unzip and move the binary to the local bin directory

$ wget https://releases.hashicorp.com/terraform/1.0.3/terraform_1.0.3_linux_amd64.zip

$ unzip terraform_1.0.3_linux_amd64.zip

$ mv terraform /usr/local/bin/Once installed run the version command to validate your installation

$ terraform --version

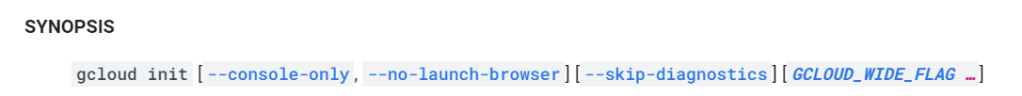

Terraform v1.0.3To authenticate to GCP with Terraform you will need GCloud, service account credentials key file, and the projectId

Prerequisites

- Using dedicated service accounts to authenticate with GCP is recommended practice (not user accounts or API keys)

- GCLOUD authentication configured with your GCP credentials. Refer to my Blog post for more details

Service account: Either create a service account with the “owner” role in the console or run the CLI commands below

$ gcloud auth login --activate

…

$ gcloud config list --format='table(account,project)'

ACCOUNT PROJECT

-------------- -------------

bdba@gmail.com brokedba2000

1 -- Create service account

$ gcloud iam service-accounts create terraform-sa --display-name="Terra_Service"

$ gcloud iam service-accounts list --filter="email~terraform" --format='value(email)'

2 -- Bind it to a project and add owner role

$ gcloud projects add-iam-policy-binding PROJECT_ID --member="serviceAccount:email" --role="roles/owner"

3 -- Generate the key file for the service account

$ gcloud iam service-accounts keys create ~/gcp-key.json --iam-account=email

SSH key pair: generate the key that will be used to connect to the instance

$ ssh-keygen -P "" -t rsa -b 2048 -m pem -f ~/id_rsa_gcp

Generating public/private rsa key pair.

Your identification has been saved in /home/brokedba/id_rsa_gcp.

Your public key has been saved in /home/brokedba/id_rsa_gcp.pub.

II. Clone the repository

- Pick a location on your file system close to your gcp-terraform directory and issue the following command.

$ git clone https://github.com/brokedba/terraform-examples.git

- terraform-provider-gcp/create-vpc/ : to grasp how we deploy a single VPC.

- terraform-provider-gcp/launch-instance/ : for the final instance deploy.

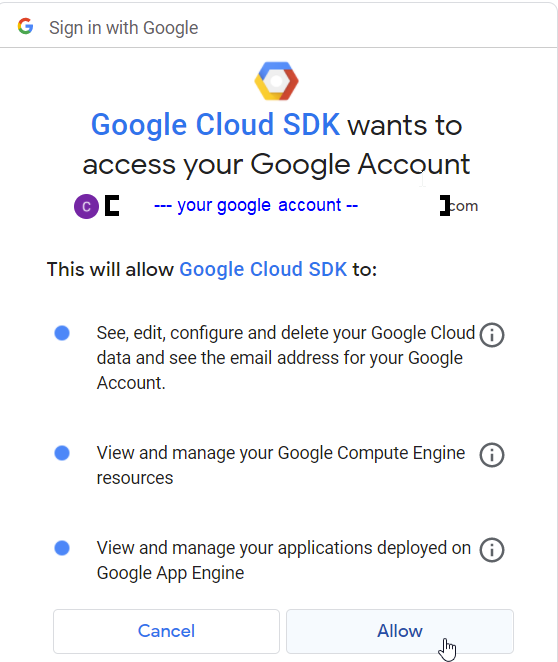

III. Provider setup

INSTALL AND SETUP THE GCP PROVIDER

- cd into “terraform-provider-gcp/create-vpc”, where our configuration resides (i.e. the VPC)

- The GCP provider plugin will be automatically installed by running “terraform init”.

- Let's see what's in the “create-vpc” directory. Here, only *.tf files matter

$ cd /brokedba/gcp/terraform-examples/terraform-provider-gcp/create-vpc

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/google...

- Installing hashicorp/google v3.88.0...

* Installed hashicorp/google v3.88.0 (signed by HashiCorp)

Terraform has been successfully initialized!

$ terraform --version

Terraform v1.0.3 on linux_amd64

+ provider registry.terraform.io/hashicorp/google v3.88.0

$ tree

.

|-- outputs.tf ---> displays resources detail after the deploy

|-- variables.tf ---> Resource variables needed for the deploy

|-- vpc.tf ---> Our vpc terraform declaration
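For reference, a provider block that ties together the key file and project ID from the prerequisites could look roughly like this. It is a sketch under assumptions: the variable names and the region are hypothetical, and the repo may organize this differently.

```hcl
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "~> 3.88" # matches the version installed by terraform init above
    }
  }
}

# Hypothetical variable names; the defaults reuse values shown earlier in this post
variable "gcp_credentials" {
  default = "~/gcp-key.json"
}

variable "project" {
  default = "brokedba2000"
}

variable "region" {
  default = "us-east1" # assumption, pick your own region
}

provider "google" {
  # The key file content is passed to the provider; the project still has to be set explicitly
  credentials = file(pathexpand(var.gcp_credentials))
  project     = var.project
  region      = var.region
}
```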

IV. Partial Deployment

DEPLOY A SIMPLE VPC

- Once authentication is set up and the provider is installed, we can run the terraform plan command to create an execution plan (a quick dry run to check the desired end state).

- The output being too verbose, I deliberately kept only the relevant attributes for the VPC resource plan

$ terraform plan

var.prefix

The prefix used for all resources in this example

Enter a value: Demo

Terraform used selected providers to generate the following execution plan.

Resource actions are indicated with the following symbols:

+ create

------------------------------------------------------------------------

Terraform will perform the following actions:

# google_compute_network.terra_vpc will be created

+ resource "google_compute_network" "terra_vpc" {..}

# google_compute_firewall.web-server will be created

+ resource "google_compute_firewall" "web-server" {..

+ name = "allow-http-rule"

+ allow {

+ ports = [ + "80", + "22", + "443", + "3389", ]

+ protocol = "tcp"

...

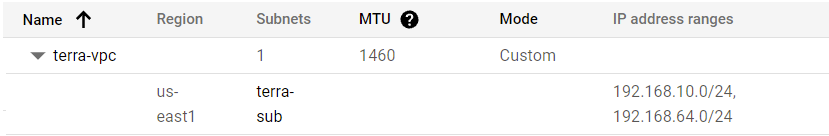

# google_compute_subnetwork.terra_sub will be created

+ resource "google_compute_subnetwork" "terra_sub" {..

+ ip_cidr_range = "192.168.10.0/24"

...}

Plan: 3 to add, 0 to change, 0 to destroy.

- Next, we can run “terraform apply” to provision the resources to create our VPC (listed in the plan)

$ terraform apply -auto-approve

google_compute_network.terra_vpc: Creating...

google_compute_firewall.web-server: Creating...

google_compute_subnetwork.terra_sub: Creating...

...

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

Outputs:

project = "brokedba2000"

Observations:

- The deploy started by loading the resources variables in variables.tf which allowed the execution of vpc.tf

- Finally terraform fetched the attributes of the created resources listed in outputs.tf
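The three resources reported by the plan could be declared along these lines. This is a sketch reconstructed from the plan output, not a copy of the repo's vpc.tf; the region, source range and target tag are assumptions.

```hcl
resource "google_compute_network" "terra_vpc" {
  name                    = "terra-vpc"
  auto_create_subnetworks = false # we create the subnet ourselves
}

resource "google_compute_subnetwork" "terra_sub" {
  name          = "terra-sub"
  ip_cidr_range = "192.168.10.0/24"
  region        = "us-east1" # assumption; can be omitted if the provider sets a region
  network       = google_compute_network.terra_vpc.id
}

resource "google_compute_firewall" "web-server" {
  name    = "allow-http-rule"
  network = google_compute_network.terra_vpc.name

  allow {
    protocol = "tcp"
    ports    = ["80", "22", "443", "3389"]
  }

  source_ranges = ["0.0.0.0/0"]  # assumption: open to the internet for the demo
  target_tags   = ["web-server"] # assumption: applies to instances carrying this tag
}
```

Because the subnet and firewall reference the network, Terraform works out the dependency order on its own, whatever the order of the blocks in the file.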

Note: We’ll now destroy the VPC as the next instance deploy contains the same VPC specs.

$ terraform destroy -auto-approve

Destroy complete! Resources: 3 destroyed.

V. Full deployment (Instance)

OVERVIEW

- After our small intro to VPC creation, let's launch a vm and configure nginx in it in one command.

- First we need to switch to the second directory terraform-provider-gcp/launch-instance/ (its content is listed in the tree output further down)

- Cloud-init: a cloud instance initialization method that executes scripts upon instance startup. See the metadata entry of the VM instance definition below (startup-script). There are 5 OS scripts (CentOS, Ubuntu, Windows, RHEL, SUSE); Windows was not tested.

...

variable "user_data" { default = "./cloud-init/centos_userdata.txt" }

$ vi compute.tf

resource "google_compute_instance" "terra_instance" {

metadata = {

startup-script = file(var.user_data)

...

- In my lab, I used cloud-init to install nginx and write an HTML page that replaces the home page at startup.

- Make sure your public SSH key is in your home directory, or just modify the path below (see variables.tf)
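Putting the startup script and the SSH key together, the instance declaration might look roughly like the sketch below. Attribute values come from the plan and apply output shown in this post; the zone and address name are assumptions, and var.user_data, var.admin and the subnet are expected to be defined in the other .tf files.

```hcl
resource "google_compute_address" "internal_reserved_subnet_ip" {
  name         = "internal-address" # hypothetical name
  address_type = "INTERNAL"
  subnetwork   = google_compute_subnetwork.terra_sub.id
  address      = "192.168.10.51"
  region       = "us-east1" # assumption
}

resource "google_compute_instance" "terra_instance" {
  name         = "terravm"
  hostname     = "terrahost.brokedba.com"
  machine_type = "e2-micro"
  zone         = "us-east1-b" # assumption
  tags         = ["web-server"]

  boot_disk {
    initialize_params {
      image = "centos-cloud/centos-7"
    }
  }

  network_interface {
    subnetwork = google_compute_subnetwork.terra_sub.id
    network_ip = google_compute_address.internal_reserved_subnet_ip.address

    access_config {} # empty block requests an ephemeral public IP
  }

  metadata = {
    # Script executed at first boot (installs nginx in this lab)
    startup-script = file(var.user_data)
    # Format expected by GCP: "<user>:<public key>"
    ssh-keys = "${var.admin}:${file("~/id_rsa_gcp.pub")}"
  }
}
```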

LAUNCH THE INSTANCE

- Once in the “launch-instance” directory, you can run the plan command to validate the 5 resources required to launch our VM instance. The output has been truncated to reduce verbosity

$ tree ./terraform-provider-gcp/launch-instance

.

|-- cloud-init --> SubFolder

| `--> centos_userdata.txt --> script to configure a web server and its homepage

| `--> sles_userdata.txt --> for SUSE

| `--> ubto_userdata.txt --> for Ubuntu

| `--> el_userdata.txt --> for Enterprise Linux distros

|-- compute.tf ---> Compute engine Instance terraform configuration

|-- outputs.tf ---> displays the resources detail at the end of the deploy

|-- variables.tf ---> Resource variables needed for the deploy

|-- vpc.tf ---> same vpc we deployed earlier

Note: As you can see, we have one additional file and one subfolder. compute.tf is where the compute instance and all its attributes are declared. All the other “.tf” files come from my VPC example, with some additions to variables.tf and outputs.tf
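The additions to variables.tf could resemble the following sketch. var.user_data and var.OS are mentioned in this post; the map name, the admin default and the Ubuntu image entry are assumptions for illustration only.

```hcl
variable "user_data" {
  description = "Path to the startup script passed to the instance"
  default     = "./cloud-init/centos_userdata.txt"
}

variable "admin" {
  description = "Login user attached to the public SSH key"
  default     = "centos" # assumption, inferred from the ssh command in the outputs
}

variable "OS" {
  description = "Key of the image to boot"
  default     = "CENTOS7"
}

# Hypothetical map name; only the CentOS entry is taken from the plan output
variable "os_image" {
  type = map(string)
  default = {
    CENTOS7 = "centos-cloud/centos-7"
    UBUNTU  = "ubuntu-os-cloud/ubuntu-2004-lts" # assumption
  }
}
```

With such a map, the boot disk image can be selected with var.os_image[var.OS], so switching distros only means changing var.OS and var.user_data.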

$ vi compute.tf

resource "google_compute_instance" "terra_instance" {

...

metadata = {

ssh-keys = "${var.admin}:${file("~/id_rsa_gcp.pub")}" ## Change me

...

$ terraform plan

------------------------------------------------------------------------

Terraform will perform the following actions:

... # VPC declaration (see previous VPC deploy)

...

# google_compute_instance.terra_instance will be created

+ resource "google_compute_instance" "terra_instance" {

+ ...

+ hostname = "terrahost"

+ machine_type = "e2-micro"

+ name = "Terravm"

+ tags = [ + "web-server", ]

+ boot_disk {

+ initialize_params {

+ image = "centos-cloud/centos-7"

+ network_interface {

...

+ network_ip = "192.168.10.51"

}

+ metadata = {

+ "ssh-keys" = <<-EOT

ssh-rsa AAAABxxx…*

EOT

+ "startup-script" = <<-EOT

EOT

}

# google_compute_address.internal_reserved_subnet_ip will be created

+ resource "google_compute_address" "internal_reserved_subnet_ip" {

...}

...

}

Plan: 5 to add, 0 to change, 0 to destroy.

- Now let’s launch our CENTOS7 vm using terraform apply (I left a map of different OS ids in the variables.tf you can choose from)

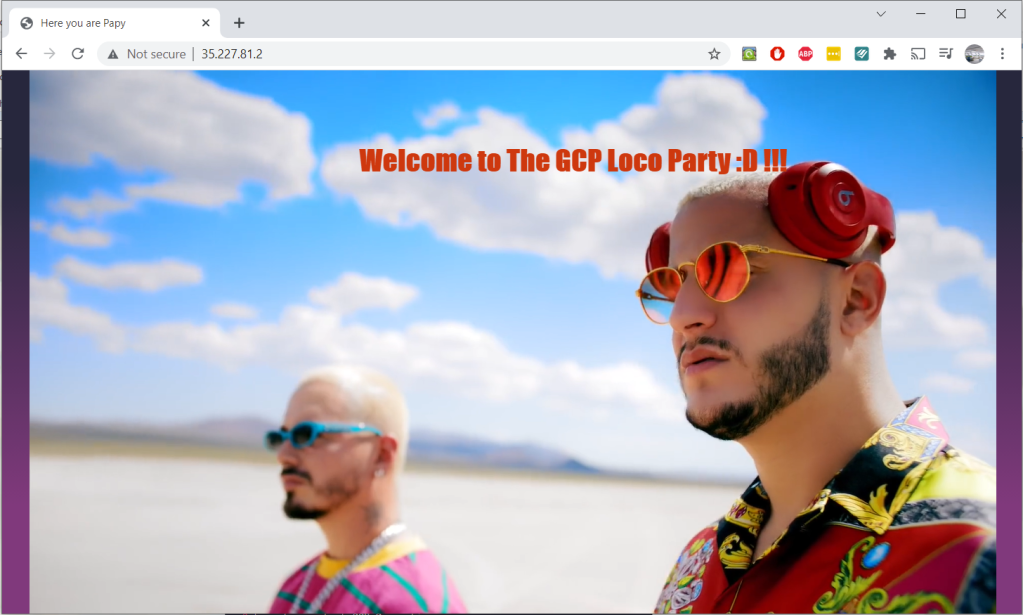

- Once the instance is provisioned, just copy the public IP address (e.g. 35.227.81.2) into Chrome and voilà!

- You can also tear down this configuration by simply running terraform destroy from the same directory

- You can fetch any of the specified attributes in outputs.tf using terraform output command i.e:

- Terraform Console: although Terraform is a declarative language, there are still myriads of functions you can use to process strings, numbers, lists, mappings, etc. There is an excellent all-in-one script with examples of most Terraform functions >> here

- I added cloud-init files for different distros you can play with by adapting var.user_data & var.OS

- We have demonstrated in this tutorial how to quickly deploy a web server instance using Terraform in GCP and leverage cloud-init (startup script) to configure the VM during bootstrap.

- We had to hardcode the project ID although it’s embedded in the credentials key file, which makes the configuration tedious and rigid

- Remember that all attributes used in this exercise can be modified in the variables.tf file.

- Route table and internet gateway didn’t need to be created

- Improvement: validate that the startup script works for Windows too.

- Another improvement would be a cleaner display of the security rules using formatlist.
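As a starting point for that formatlist idea, an output could flatten each allow block into protocol/port strings. This is only a sketch, assuming the firewall resource is named web-server as in the plan output; it is not what the repo currently does.

```hcl
output "fire_wall_rules" {
  # formatlist repeats the scalar protocol for every element of the ports list,
  # and flatten merges the per-rule lists into a single list of strings
  value = flatten([
    for rule in google_compute_firewall.web-server.allow :
    formatlist("%s/%s", rule.protocol, rule.ports)
  ])
}
```

With the rule used in this lab, this would render entries such as tcp/80 instead of the nested toset/tolist structure.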

stay tuned

$ terraform apply -auto-approve

...

google_compute_network.terra_vpc: Creating...

google_compute_firewall.web-server: Creating...

google_compute_subnetwork.terra_sub: Creating...

google_compute_address.internal_reserved_subnet_ip: Creating...

google_compute_instance.terra_instance: Creating...

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.

Outputs:

vpc_name = "terra-vpc"

Subnet_Name = "terra-sub"

Subnet_CIDR = "192.168.10.0/24"

fire_wall_rules = toset([

{…

"ports" = tolist([

"description" = "RDP-HTTP-HTTPS ingress trafic"

"destination_port_ranges" = toset([

"80",

"3389",

"443",

"3389",])

]

hostname = "terrahost.brokedba.com"

project = "brokedba2000"

private_ip = "192.168.10.51"

public_ip = "35.227.81.2"

SSH_Connection = "ssh connection to instance TerraCompute ==> sudo ssh -i ~/id_rsa_gcp centos@35.227.81.2"
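Outputs like the ones above could come from an outputs.tf along these lines. It is a sketch that assumes the resource name shown in the apply log; the exact expressions in the repo may differ.

```hcl
output "private_ip" {
  value = google_compute_instance.terra_instance.network_interface[0].network_ip
}

output "public_ip" {
  # nat_ip is the external address assigned through access_config
  value = google_compute_instance.terra_instance.network_interface[0].access_config[0].nat_ip
}

output "SSH_Connection" {
  value = format(
    "ssh connection to instance %s ==> sudo ssh -i ~/id_rsa_gcp centos@%s",
    google_compute_instance.terra_instance.name,
    google_compute_instance.terra_instance.network_interface[0].access_config[0].nat_ip
  )
}
```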

Tips

$ terraform output SSH_Connection

ssh connection to instance TerraCompute ==> sudo ssh -i ~/id_rsa_gcp centos@ ’public_IP’

CONCLUSION

Thank you for reading!